The art of the illusion with photogrammetry

The Problem

This was one of the most amazing finds of the last year for me. As someone with no real classical artistic talent, I find the idea of taking real-world assets and getting them into computers fascinating.

There are many different ways and processes for doing this. There is an animation technique called Rotoscoping, in which you take a stream of pictures (usually filmed footage) and trace over the movement frame by frame to give the animation a lifelike feel.

Rotoscoping

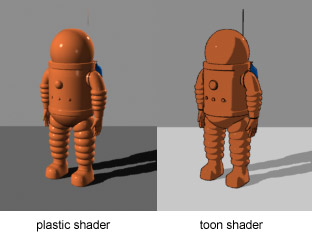

There is cel shading, a rendering technique that makes 3D computer graphics look flatter and more two-dimensional, like a hand-drawn cartoon.

Of all of them, the most magical to me has always been photogrammetry.

Photogrammetry is the science of making measurements from photographs, especially for recovering the exact positions of surface points. It is as old as modern photography, dating back to the mid-19th century. In the simplest example, the distance between two points that lie on a plane parallel to the photographic image plane can be determined by measuring their distance on the image, provided the scale of the image is known.
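The simplest case described above can be sketched in a few lines of Python. This is a minimal sketch assuming an ideal pinhole camera pointed straight at a flat subject (so the subject plane is parallel to the image plane), where the image scale is the focal length divided by the camera-to-subject distance. The function and parameter names are illustrative and not taken from any particular tool.

```python
def image_scale(focal_length_mm: float, camera_distance_m: float) -> float:
    """Scale of the photo: millimetres on the sensor per millimetre in the scene.

    Assumes an ideal pinhole camera and a subject plane parallel to the
    image plane, camera_distance_m away from the camera.
    """
    return focal_length_mm / (camera_distance_m * 1000.0)


def real_distance_m(image_distance_mm: float, focal_length_mm: float,
                    camera_distance_m: float) -> float:
    """Convert a distance measured on the sensor into a real-world distance."""
    scale = image_scale(focal_length_mm, camera_distance_m)
    # Dividing by the scale undoes the shrinking; then convert mm to metres.
    return image_distance_mm / scale / 1000.0


if __name__ == "__main__":
    # Two points 10 mm apart on the sensor, 50 mm lens, subject 5 m away.
    print(real_distance_m(10.0, 50.0, 5.0))
```

With these example numbers the two points come out roughly 1 metre apart. If the subject is tilted relative to the camera, the scale varies across the image and this simple conversion no longer holds.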

In 2008 Microsoft released a service in beta called PhotoSynth. I recall it being hailed as the next big thing in photography, and it was truly magical: given enough ordinary two-dimensional photographs, it would calculate a 3D point cloud that could be used to model the scene in 3D space, much like hundreds of Minecraft blocks. The idea was that one day you would be able to take a single photo and, with the massive resources of PhotoSynth behind you, augment it and turn it into a 3D model.
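The core idea behind recovering 3D points from ordinary photos is triangulation: if the same feature is spotted in two photos taken from known camera positions, the rays from each camera through that feature meet (or nearly meet) at the feature's 3D location. Below is a minimal sketch of the midpoint method, assuming the camera centres and viewing directions are already known. In a real structure-from-motion pipeline those have to be estimated first by matching features across photos, which is far more involved and not shown here. This illustrates the general technique only, not how PhotoSynth itself was implemented.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def add_scaled(p: Vec3, d: Vec3, t: float) -> Vec3:
    """Return the point p + t * d."""
    return (p[0] + t * d[0], p[1] + t * d[1], p[2] + t * d[2])


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Vec3:
    """Estimate a 3D point seen along two camera rays.

    Each ray starts at a camera centre (c1, c2) and points along a viewing
    direction (d1, d2). Noisy rays rarely intersect exactly, so this finds
    the closest point on each ray and returns the midpoint between them.
    """
    w0 = (c1[0] - c2[0], c1[1] - c2[1], c1[2] - c2[2])
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the point cannot be triangulated")
    t = (b * e - c * d) / denom  # position along ray 1
    s = (a * e - b * d) / denom  # position along ray 2
    q1 = add_scaled(c1, d1, t)
    q2 = add_scaled(c2, d2, s)
    return ((q1[0] + q2[0]) / 2, (q1[1] + q2[1]) / 2, (q1[2] + q2[2]) / 2)


if __name__ == "__main__":
    # Two cameras 2 units apart, both looking at a point at (1, 1, 5).
    print(triangulate_midpoint((0, 0, 0), (1, 1, 5), (2, 0, 0), (-1, 1, 5)))
    # prints (1.0, 1.0, 5.0)
```

Repeating this for thousands of matched features is what produces a point cloud.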

I took ~200 photos of the statue of Diana and the Fawn in Cannizaro Park and turned them into the most amazing 3D model, which I could spin around and view from absolutely any angle. It was like magic.

PhotoSynth

Unfortunately this was not to last, and over time the service was cut back to being a (very good) photo stitching service.

The problem was that, with this service gone, there was no easy way for me to turn my beautiful flat 2D images into a fantastical 3D model, so they have sat dormant, sad and unanimated since 2010.

Diana and the Fawn

The Solution

Fast forward to a week ago, when I found a wonderful program called Meshroom by AliceVision.

Meshroom

This program promised the same goodness as Microsoft’s PhotoSynth, but all run locally on your own computer.

I was able to bring my images back to life using Meshroom and Blender.

Blender

To do this, I followed an amazing video by CG Geek.

Loading my ~200 images into Meshroom with the default settings and hitting go resulted in a lot of waiting, but a pretty spectacular result.

A small sub section of the images

Loaded into Meshroom

Two hours later I had a textured 3D model, created entirely from my 2D images.

There are a few things that are important when using this technology:

- Good high quality images, without blur

- A camera that Meshroom already knows about (one in its camera sensor database), or the willingness to add it yourself

- About 60% overlap between photos to allow the algorithm to work

- Willingness to learn a bit of Blender (which was pretty complicated for me)

- Patience
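Two of the points above can be put into rough numbers. Meshroom needs to know your camera’s sensor size because, roughly speaking, the lens focal length recorded in each photo’s EXIF data has to be combined with the sensor width to get the focal length in pixels, a standard pinhole-camera conversion. The overlap guideline, in turn, translates into a maximum distance you can move between shots. The sketch below assumes an ideal pinhole camera and a roughly flat subject facing the camera; the function names and the example camera figures are hypothetical, so treat the results as rough guidance for planning a shoot, not as what Meshroom computes internally.

```python
def focal_length_px(focal_length_mm: float, sensor_width_mm: float,
                    image_width_px: int) -> float:
    """Convert a lens focal length in mm into pixels for a given sensor."""
    return focal_length_mm * image_width_px / sensor_width_mm


def max_step_between_shots_m(subject_distance_m: float, focal_length_mm: float,
                             sensor_width_mm: float, overlap: float = 0.6) -> float:
    """Largest sideways move between photos that keeps the requested overlap.

    One photo covers a strip of the subject roughly
    subject_distance * sensor_width / focal_length wide (pinhole model).
    To keep `overlap` of that strip shared with the next photo, you can
    only move by the remaining (1 - overlap) fraction of it.
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    footprint_m = subject_distance_m * sensor_width_mm / focal_length_mm
    return footprint_m * (1.0 - overlap)


if __name__ == "__main__":
    # Hypothetical camera: 4 mm lens, 8 mm wide sensor, 4000 px wide images.
    print(focal_length_px(4.0, 8.0, 4000))          # 2000.0
    print(max_step_between_shots_m(2.0, 4.0, 8.0))  # about 1.6 metres, subject 2 m away
```

Without the sensor width, the first conversion cannot be done, which is why an unknown camera is a problem.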

The most rewarding part of this project for me was bringing my photos from a previous project back to life. They were uploaded to Google Photos at some point and have sat there for 8 years. They are stored using the free tier, which introduces some additional compression, but they seem to have weathered time well enough.

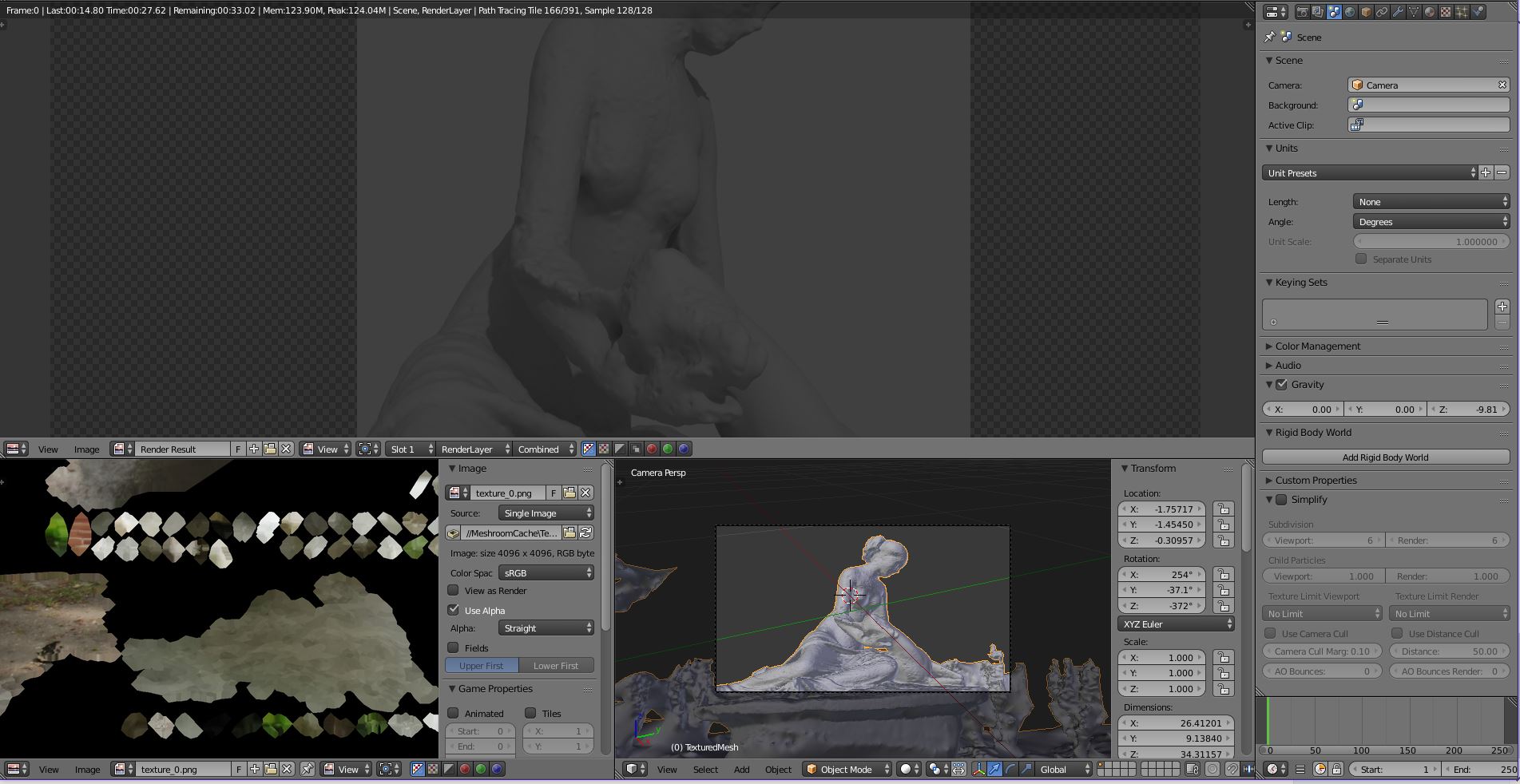

Once I imported the OBJ model from Meshroom into Blender, I was greeted with a lot of complicated screens and information.

There was a 3D model, which included some of the surroundings, and 4 texture atlases for the scene. A texture atlas is essentially a flat image, and the model stores the information for how to project it onto its surface, kind of like throwing a carpet over a mannequin, but doing it in a specific way every time so that you end up with the same result.
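The “carpet over a mannequin” idea is UV mapping. In an OBJ file, each corner of each face references a texture coordinate (a `vt` line holding u and v values, normally between 0 and 1), which says which spot on the flat atlas image belongs on that part of the surface. A minimal sketch of that lookup is below, assuming the usual OBJ convention that v = 0 is the bottom of the image while image rows are stored top to bottom. It uses nearest-pixel sampling, whereas renderers like Blender normally blend neighbouring pixels; the function names are illustrative.

```python
from typing import List, Sequence, Tuple


def uv_to_pixel(u: float, v: float, width: int, height: int) -> Tuple[int, int]:
    """Map a UV coordinate in [0, 1] to a (column, row) in a texture image.

    OBJ texture coordinates put v = 0 at the bottom of the image, while
    images are usually stored with row 0 at the top, so v is flipped.
    Results are clamped so u or v of exactly 1.0 stays inside the image.
    """
    x = min(max(int(u * width), 0), width - 1)
    y = min(max(int((1.0 - v) * height), 0), height - 1)
    return x, y


def sample_nearest(texture: Sequence[Sequence[int]], u: float, v: float) -> int:
    """Return the texel nearest to (u, v) from a texture stored as rows."""
    height = len(texture)
    width = len(texture[0])
    x, y = uv_to_pixel(u, v, width, height)
    return texture[y][x]


if __name__ == "__main__":
    # A tiny 2x2 "atlas": top row 10, 20; bottom row 30, 40.
    atlas: List[List[int]] = [[10, 20], [30, 40]]
    print(sample_nearest(atlas, 0.1, 0.9))  # top-left texel: 10
    print(sample_nearest(atlas, 0.9, 0.1))  # bottom-right texel: 40
```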

I was very pleased with the model.

3D Wireframe Model

And the textures weren’t looking too bad either.

Textured in Blender

Unfortunately, my final rendered output was in need of a bit of attention.

A very cement look and a lot of additional scenery

I was able to animate this and export the result as a video, which I was very pleased with.

But there was more work to be done. The grounds around the statue didn’t look very good, but it was a quick step to remove all that additional scenery and add some lights to the scene to polish it up.
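In Blender, removing the scenery is an interactive editing step, but the idea behind it is simple to sketch: keep only the vertices inside a region around the statue, drop any face that uses a vertex outside it, and renumber what is left. The version below assumes the mesh is a plain list of vertex positions plus faces given as tuples of vertex indices, and uses a sphere as the region to keep; the function name, centre and radius are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Face = Tuple[int, ...]


def crop_mesh(vertices: Sequence[Vec3], faces: Sequence[Face],
              centre: Vec3, radius: float) -> Tuple[List[Vec3], List[Face]]:
    """Keep only the part of a mesh that lies within `radius` of `centre`.

    Vertices outside the sphere are discarded, any face touching a
    discarded vertex is dropped, and the remaining faces are re-indexed
    to match the new, shorter vertex list.
    """
    old_to_new: Dict[int, int] = {}
    kept_vertices: List[Vec3] = []
    for index, position in enumerate(vertices):
        if math.dist(position, centre) <= radius:
            old_to_new[index] = len(kept_vertices)
            kept_vertices.append(position)

    kept_faces = [
        tuple(old_to_new[i] for i in face)
        for face in faces
        if all(i in old_to_new for i in face)
    ]
    return kept_vertices, kept_faces


if __name__ == "__main__":
    verts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (10.0, 0.0, 0.0)]
    tris = [(0, 1, 2), (1, 2, 3)]  # the second triangle reaches the far vertex
    print(crop_mesh(verts, tris, centre=(0.0, 0.0, 0.0), radius=2.0))
```

Re-indexing matters because faces refer to vertices by position in the list, so deleting vertices without renumbering would silently connect the wrong points.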

Since I had taken these photos in normal daylight without a flash, the idea was to light the model as evenly as possible without introducing any new shadows, which in Blender was just a checkbox on the lights.

And this brought me to my final output.

This video is a more dynamic pan around the model, to show that I can now view it from any angle I want. While it is still a little too shiny, it represents the statue much better now.

Where to from here

The next step for me is to see whether Meshroom can map an indoor area properly with better photos. Indoor scenes pose a particular problem: many of these algorithms rely on texture and distinctive objects to work out how the camera is moving through a scene, and walls painted a single colour give them very little to work with, so you need interesting rooms if you hope for this to succeed.
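One way to see why plain walls cause trouble: feature detectors look for places where brightness changes sharply, such as corners and edges, and a flat painted wall has almost none. The sketch below scores a greyscale image patch by the average squared brightness difference between neighbouring pixels; a score near zero means there is little texture for feature matching to latch onto. It is a simplified stand-in for illustration, not Meshroom’s actual feature detection, and any threshold you choose for “too flat” would be an assumption you would need to tune.

```python
from typing import Sequence


def texture_score(patch: Sequence[Sequence[float]]) -> float:
    """Average squared gradient of a greyscale patch (rows of pixel values).

    Uses simple forward differences: the change to the right-hand
    neighbour and the change to the neighbour below. Flat, uniformly
    painted areas score close to zero; busy, detailed areas score high.
    """
    rows, cols = len(patch), len(patch[0])
    if rows < 2 or cols < 2:
        raise ValueError("patch must be at least 2x2 pixels")
    total = 0.0
    for y in range(rows - 1):
        for x in range(cols - 1):
            gx = patch[y][x + 1] - patch[y][x]
            gy = patch[y + 1][x] - patch[y][x]
            total += gx * gx + gy * gy
    return total / ((rows - 1) * (cols - 1))


if __name__ == "__main__":
    plain_wall = [[200] * 4 for _ in range(4)]
    checkerboard = [[0 if (x + y) % 2 else 255 for x in range(4)] for y in range(4)]
    print(texture_score(plain_wall))    # 0.0
    print(texture_score(checkerboard))  # 130050.0
```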

I think it is amazing how far this technology has come, and what you can now do with open source projects that are out in the wild for anyone to try. I’m quite keen to see how much of my world I can map.

With mapping technology like Google Maps and Bing Maps, how long will it be until they can apply this to Street View photos and give us a literal, like-for-like 3D view of what we would see, which we could walk around in?

How much more immersive would Google Earth be with technology like this behind it?

They are already using similar technology, but if they could source ground-level data, this could essentially turn our whole world virtual.

Imagine deciding to play Grand Theft Auto or Just Cause and choosing your last holiday destination or your local neighbourhood as the location. Artists would no longer be constrained by building the environment; instead they could focus on making dynamic stories that adapt to whatever environment you choose to play in.

We’re not quite there yet, but how about Tower Defence on Google Maps?